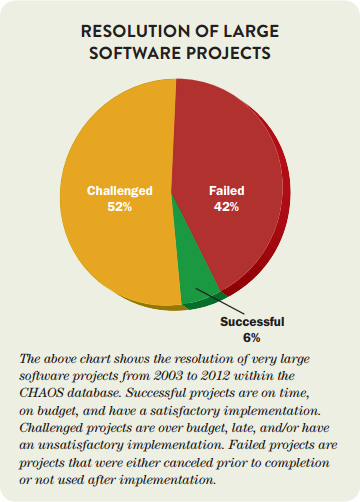

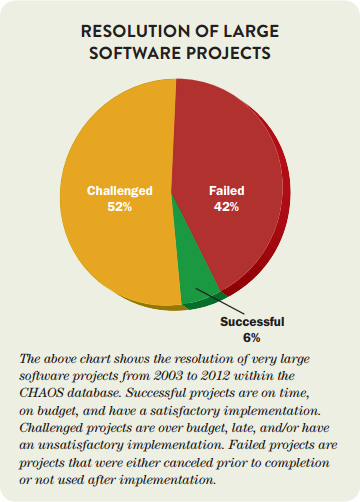

Project failure is very real

Standish Group research shows ‘Of 3,555 projects from 2003 to 2012 that had labor costs of at least $10 million, only 6.4% were successful’. 41% were total failures and the rest were vastly over budget or did not meet expectations.

tl;dr “This article summarizes my twenty-five years in the Software Development industry. Project failure is commonplace today, and Technology Fragmentation is a key cause.”

1989 -> 1999: One Project Failure – mostly PowerBuilder/ Oracle

From a summer engineer in 1989 to leading a project rescue in 1999, only one project failed. Seriously. (The only failure was with a Big 5 IT consultancy, staffed with mostly non-technical resources who did not want to be on that particular project).

All projects from 1994->1999 were PowerBuilder/ Oracle. Many people became experts in them and there were no additional frameworks. Virtually every project used similar architectural techniques.

During this period a small percentage of individuals appeared essential to some projects. At a minimum some hands-on developers undoubtedly shaved man-years off project costs. At least twice I saw several contractors almost certainly rescue a project; for the most part they did this by training/ leveraging existing staff, not super-human 80+ hour week coding.

1999->2001: Fought off Failures – Java + major Frameworks:

Technically I had only one outright failure with Java, when I opted not to burn out and resigned from an insanely well-paying contract. They burned through ~$10m in six months.

On the first two Java projects I averaged ~80 hours per week, exceeded 100 hours per week over one seven week period and even had some 24 hour days. I was the main technical resource, unfortunately hired late in their SDLCs. Developers’ lack of knowledge of the entire technology stack was a core issue. This required me to learn quickly and work unsustainable hours to stabilize projects/ educate others.

On these successful projects several developers followed close behind my lead – it was a team effort to succeed against the unknown technologies, but only between ~20->30% of the team in each case. A significant problem was the number of technologies to learn.

With PowerBuilder/ Oracle even the weakest team members were somewhat competent in one of the two technologies, and they generally soon improved (having lots of other people to learn from). With Java and its increasing number of frameworks/ app-servers/ etc it was not uncommon for a project to only have one expert per framework/ tool. This meant several people became critical to project success. If they had bluffed their way through the interview their area was a ticking time-bomb. With a plethora of frameworks it is very hard to technically screen all candidates; unless an expert is used for interviews bluffers can be very hard to weed out.

Some Stability with .Net: 2002-> 2007

My first .Net project failed outright, but was my only outright failure with .Net until ~2012. No one understood the technology on our first project; about a year in I was making great breakthroughs, solving most of the long standing issues. Unfortunately, due to missed long-term deadlines, our strong manager was ousted and replaced with a ‘yes-man’; we disagreed and he soon ousted me. That project failed within a year and I received several supportive emails from client staff. Approaching $10m of tax-payer money was wasted; subsequently I have read many news stories slamming IT at that major branch of the Government (employs ~300,000 people).

Once up to speed with .Net virtually every project called me a ‘superstar’, ‘insanely productive’ etc and I did not see a single failure. Unfortunately there was plenty of evening and weekend work to extinguish fires/ meet deadlines.

Why were these projects all successful? We knew the entire technology stack. In particular I knew .Net and Oracle/ SQL Server very well; this enabled extinguishing fires quickly and permitted time to educate their developers. Many were ‘Over-the-Top’ thankful to me for taking the time to assist them (I was glad to help/educate!).

Stress with .Net: 2007 -> Present

By 2007 I still had no failed .Net projects where I had control but most were stressful; typically from overwhelming amounts of evening/ weekend work.

In ~2007 the .Net market really exploded bringing three major issues:

- Quantity of frameworks sky-rocketed

- Quality of frameworks reduced

- Hiring quality people became harder

By 2007 most projects I arrived at had a fair number of frameworks/ tooling: Microsoft Patterns and Practices, ASP.Net Membership Provider, Log4Net and NUnit were particularly popular. As time progressed ORMs came into the mix; I have worked with at least five ORMs so far. Templating/ code generation frameworks and IDE plug-ins like ReSharper were also popular.

Few new technologies save time in the short term. Most frameworks/tools only reduce time/money/complexity the second or third time used. This is a well documented fact. A rule of mine has been to never use more than two new technologies on a single project. Learning curves and risks are just too great.
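As a rough sketch of that two-new-technologies rule, a proposed stack can be compared against what the team already knows. The function names and the sample stack below are made up for illustration; only the limit of two comes from the rule above.

```python
# Hypothetical helper: the names and sample data are illustrative assumptions.
# Only the limit of two new technologies comes from the rule in the text.
MAX_NEW_TECHNOLOGIES = 2


def new_technologies(proposed_stack, known_by_team):
    """Return the proposed technologies nobody on the team has used, sorted."""
    known = {name.lower() for name in known_by_team}
    return sorted(t for t in proposed_stack if t.lower() not in known)


def exceeds_learning_budget(proposed_stack, known_by_team,
                            limit=MAX_NEW_TECHNOLOGIES):
    """True when the stack asks the team to learn more than `limit` new things."""
    return len(new_technologies(proposed_stack, known_by_team)) > limit


if __name__ == "__main__":
    proposed = ["SQL Server", "WCF", "Backbone", "Marionette", "RequireJS"]
    known = ["sql server", "wcf", "jquery"]
    print(new_technologies(proposed, known))
    print(exceeds_learning_budget(proposed, known))
```

A check like this says nothing about how hard each technology is to learn; it only makes the count visible before the architecture is signed off.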

~2007 -> 2012 was my ‘Project-Rescue Phase’. The majority required enormous effort to understand the technology stack, and generally battles with managers/ architects to stabilize. Stabilizing typically meant removing unnecessary technologies and performing major refactorings to simplify code/ make it testable via Continuous Integration.

On my final ‘project-rescue’ contract, within three weeks we met a deadline despite the team having zero to show for the previous three months. Their Solution Architect left them a high level design document littered with fashionable buzzwords; nothing useful had been produced. Two previous architects, including the original solution architect, made no progress. I was their fourth architect in about three months. One other developer and I began from scratch, coding everything to meet phase 1 in three weeks; the other five people did little but heckle. The consulting company I assisted was still being difficult so I left them to it; they lost that client. Being fair they put something into production, but it took three times longer and was a terrible product. They burned through three more architects during that time.

Performance Tuning: 2010

Around 2010 I advertised for and landed about ten performance tuning/ defect fixing contracts. Massive stress but great intellectual challenges fixing issues customers could not squash. I had a 100% success rate with these, taking a maximum of four days 🙂

An opportunity to observe many systems over a short period. Unnecessary Complexity was a constant. Frameworks and trendy development techniques being primary offenders. One customer had a mix of Reflection and .Net Remoting that hindered debugging most of their code base for years. I removed that problem in ~twenty minutes which stunned their coders – they were amazed with wide open eyes and gaping mouths cartoon style 🙂 [Topic-change: this is where experience counts and that minuscule piece of work was in the 100x Developer zone. Such times are rare, do not let anyone tell you that they are consistently a 10x developer.]

100% Failed .Net Projects: 2012 -> Present

2012 forward I decided to stop the ‘workaholic rescue’ thing and try to talk sense into managers/ architects/ stakeholders. This was ineffective. Two projects failed outright and the third is a Death-March. Stakeholders believe all is fine as they ‘tick-off progress boxes’ but reality is a long way from their current perception. [Update Feb 2014: They are now at least $50m over budget]

Two of these projects suffered from ‘resume-driven architectures’; the other suffered from wishful thinking on timescales, but they hired about two hundred Indian contractors to compensate, which always works (not). I was tempted to give each member of the leadership team three copies of The Mythical Man-Month; three copies so they could read it three times faster.

Quantity Up/ Quality Down for additional Frameworks and Tooling:

From 1989 -> ~1998 the number of technologies was modest.

About 1999 the Internet-Effect really began. Ideas took center-stage in the early days. Certainly in the Java world many were rushing to use the latest techniques they had just read about online: EJBs, J2EE, Distributed Processing, Design Patterns, UML etc… Most teams were crippled by senior staff spending their time in these trendy areas rather than focusing on business needs (aka meta-work). This coincides with the beginning of my ‘project rescues’ and being told way too often that I was “hyper-productive compared to the rest of the team”.

By ~2007 open source frameworks and tools were center-stage in most projects. Since then we have seen exponential growth, and large companies (Sun, Microsoft, even Google) proliferated their dev spaces with low quality framework/tool after low quality framework/tool, presumably hoping some would stick. Apple is one of the few to exercise real restraint. The Patterns and Practices group within Microsoft is a particularly shameful example most of us are familiar with.

Over Twenty Languages/Frameworks/ Tools is now common?

It is tough to single out one project, but below I quickly listed forty-two basic technologies/ core concepts a sub-project at one company used:

“VS 2012, .Net 4+, html5, css3, JavaScript, SPA/ MVC concepts, Backbone, Marionette, Underscore, QUnit, Sinon, Blanket, RequireJS, JSLint, JQuery, Bootstrap, SQL Server, SSIS, ASP.Net Web API, OData, WCF, Pure REST, JSON, EF5, EDMX, Moq, Unity DI, Linq, Agile/Scrum, Rallydev, TFS Source Control, TFS Build, MSBuild scripts, TFS Work Item Management, TFS Server 2010/ 2012, ADSI, SSO, IIS, FxCop, StyleCop, Design Patterns (classic and web)”

Beyond the buzzwords, virtually every area of the application was a custom implemented/ modified framework rather than taking standard approaches. It was certainly job-security and helped lock out anyone new to the team.

That is just one of three projects since 2012 where I “complained three times, was not listened to so walked away as calmly as possible rather than fight like I used to”.

Resume-driven Architectures / Ego-driven Development

Why do most contemporary projects use so many frameworks and tools? I see three key drivers that happen during the Solution Architecture phase:

- Strong External Influence

- Resume Driven Architectures

- Ego Driven Development

Strong External Influence is a key driver. SOA appearing on magazine covers, Microsoft MVPs all singing the same tune etc. Let’s look at how these work. What appears on magazine covers is driven by the major advertisers. SOA sold more servers, hence it was pushed on us. Many friends are MVPs so I must take care in explaining them: many try hard to stay independent but most are influenced by their MVP sponsor to publish material around certain topics. Over the years I have seen many lose their MVP status and generally it was after outbursts against Microsoft, or they stopped producing material that Microsoft Marketing wishes to see. Apologies to MVPs, but you all know this is the truth.

Resume Driven Architectures is the Solution Architect desiring certain buzzwords on his/her resume to boost their own career, and/or being insecure about finding their next role without the latest buzzwords. On one project I had to leave early the Solution Architect mandated an ESB for a company with under 2,000 employees! Insanity. Of course they failed outright, but not before going three times over schedule and having almost 200% turnover in their contract positions during a six month period! It is not fair to single out any one individual; we have all seen a platoon sized number of such people over our careers.

Ego Driven Development: Bad managers tend to compare ‘number of direct reports’ when trying to impress one-another. Bad architects do the same thing, just with latest buzzwords.

What needs to happen?

With fewer technologies one or two key players can learn them all and stabilize a project. This is not feasible with over forty technologies.

Already in the JavaScript community we are seeing a backlash against needing large numbers of frameworks, but this is causing further fragmentation. A core concept of AngularJS (and others) is to not rely on a plethora of other frameworks. Of course early stages of learning ‘stand-alone’ frameworks like AngularJS are tough. Frameworks generally do not save time until the second or third project we use them on. We could learn AngularJS but what if our next project does not use it? Time wasted, likely no efficiency gained.

No-brainer: Reduce additional frameworks

Doh, virtually all of us realize this! The problem is how? Personally I am researching Angular and Node with the intent of waiting until a suitable position appears. This approach vastly limits the projects we can work on. [Update: I ended up taking a Jenkins/ CI stabilization job close to home but eventually did get to use Angular there.]

Personal experience shows that once a project with a vast number of frameworks is given the green light it takes a Herculean Effort to even tweak its Solution Architecture. As independent contractors we can avoid clearly-crazy projects including those loaded with buzzwords. Unfortunately that limits the projects we can work on. It may keep us sane though; I believe:

“Beyond two new technologies project success is inversely proportional to their combined complexity”

Ensure the Solution Architect is Hands-on

I used to believe this was a perfect solution: if only Architects had to build what they preached, they would constrain Architectures to the bounds of reality. Unfortunately this appears not to be universally the case; perhaps it reins them in a small amount? I have witnessed more than one case of someone attempting to implement their own bizarre architecture.

Even in the worst case, having to implement their designs themselves must rein in an Architect’s craziest ideas. Personally I shy away from pure architecture, especially above multiple teams. Before now, when under pressure, I have resorted to semi-bluffing and palming quickly cobbled-together ideas off onto others, safe in the knowledge I did not have to implement them. I soon became cognizant of what I was doing and brought it to a swift halt. Do you think others will be so honest? Let’s try to ensure Architects are on implementation teams.

Ignore vendor influence

Guy Kawasaki has a lot to answer for. IT vendors have long tried to sell us what we do not need, but Guy Kawasaki introduced many techniques we see today.

Attended a free conference or user group with quality speakers lately? Receive free trade magazines? Java ones are funded by larger players in that space, Microsoft ones often (indirectly) by Microsoft itself. There is great value in these resources, but please keep your eyes open for manipulation.

SOA is a primary one I refer to. Magazine after magazine had SOA emblazoned on their front covers, many conference talks were around SOA.. it became the buzzword du jour for years. SOA used conservatively is fantastic, but from about 2002->2010 I saw project after project with SOA sprinkled around as if salt from a large salt-shaker. Re-factoring to remove/ short-circuit SOA was a key technique of mine – ‘strangely’ removing much of it led to much more maintainable and performant code. Why was SOA so heavily hyped by our industry? Distributing code leads to more servers, which increases hardware sales and more importantly server license sales. Server license sales are where the big-players make their real money. Costs of even a smaller company’s SQL Server or Oracle licensing soon ring up to millions-of-dollars. High costs accompany CRM, ERP, TFS, Sharepoint and most other common server-based software.

Younger Architects are particularly susceptible to vendor influence. Younger people are more easily influenced, tempted by implicit promises etc and soon saddle their projects with many trendy buzzwords. How could a project possibly fail if every buzzword is hot on Reddit and our vendor representatives cannot stop talking about them?

Embedding consultants/ evangelists into large companies is very common. Received free conference tickets from a vendor? Free training and elite certifications? Sorry to lift the curtain, but clearly these are tricks which exist to coerce you into using particular technologies.. and buying more servers! The consultants and evangelists are of course generally not evil, but they are trained to believe in what they are selling.

Become a Solution Architect

Being the Architect certainly works. Every project I had significant control over was a tremendous success. Unfortunately most projects select their Architects based on popularity with management and other non-technical attributes.

Frequently senior leadership believes Solution Architects should manage multiple projects and not be hands-on with code. This is a mistake. Personally I have turned this role down several times as it leads to poor Architectures – as stated above I have caught myself bluffing before in this role. Solution Architects should not span multiple projects. Staying hands-off for long leads to believing the marketing of technologies. Marketing is often far from reality.

Most companies’ in-house Architects tend not to be the strongest technically. Senior leadership looks for softer skills – can they convince/ bully others, have a large physical presence etc. Notice how often we see tall white male Solution Architects? ~80%+ of the time yes? When this is not the case the Architect is almost always technically sound – because they attained the position on technical merit. All too often leadership looks for someone ‘with weight to throw around’ – at the Fortune 10 discussed above virtually all ‘thought leaders’ in our department had a large physical presence, and shouting at subordinates was second nature. It was amusing when I was asked to review the work of the two worst because so many people were complaining about them.

Conclusions

Hopefully it is clear that we must reduce the number of technologies in our projects. For the foreseeable future it is unlikely we can return to the stability/ predictability of pre-Internet/ tech-boom days.

This post is far longer than my notes/ original outline predicted. In future I will partition posts into more digestible subtopics with more focus on how we can improve. There is good information here, so under time-pressure I decided to publish as-is. Being between contracts Angular and Node are calling my name. These two appear the most likely to emerge as victors from current JavaScript framework fragmentation.